Hi guys, I am Uruj! Welcome to my blog. I mostly post about what I have learned from my academic and research work, and about my involvement in recent projects.

CV

Posted in Uncategorized

Embedded System – What sorcery is this?

I joined the FitOptiVis project in March 2020. The project itself is quite big, but its main target was low-power design that still achieves high performance, specifically for image processing applications. In the beginning, it was a bit difficult to understand the project as a whole, especially since I come from a biomedical engineering background and this entire project was based on embedded systems. In the past, I have worked with medical equipment, microcontroller-based systems, medical devices, and image processing and image analysis techniques in designated software for biomedicine and neuroscience, so working with core embedded systems and reference architectures was entirely new to me, especially as the application was nowhere near biomedicine. As a basic definition, I understood that an embedded system mainly involves microprocessor-based hardware together with its software counterpart. For example, during my master’s thesis, I programmed an Arduino microcontroller in basic C using Arduino’s own software to control the on and off times of a white LED light source, which served as a light stimulus for the behavioural analysis of zebrafish, an animal model used to understand certain neurological diseases. In a way, this can be regarded as a small-scale embedded system, where the software meets the hardware along with some connected devices.

This was the basic understanding and experience I had when I joined the FitOptiVis project, and working on a large-scale embedded system turned out to be entirely different. I started with the software side, where I simply used C to test an already developed system. This was the tricky part, because what exactly was the already developed system? To my understanding, it was the software model of a System-on-Chip (SoC) design, where an SoC is simply an integrated circuit backed by an entire memory system. Of course, the full design consisted of several other components, but my main task at the time was to see how the design behaved in software simulation. Just as any basic desktop computer has a processor to execute operations, this SoC (chip) environment was based on a RISC-V processor design. In larger applications, RISC-V has the advantage of being an open-source architecture, which is often argued to benefit security compared to proprietary architectures such as ARM and Intel. Because this was a research project, however, the main idea was to analyse how the chip design behaves both in software simulation and as a physical chip, for which the project had designed its own in-house measurement system.

Initially, I ran some publicly available RISC-V tests and observed how the simulation environment responded to them. This helped define the limitations of the system, which in turn helped in developing different software testing techniques in C. It was also a good way for me to become familiar with the RISC-V architecture and the tools normally used in such systems. Later in the project, I developed convolution tests using basic C loops, structured around deep learning concepts so that the tests behaved as though they had neural network layers: each layer takes an input dataset and convolves it into an output dataset, and that output becomes the input for the following layer. The datasets can be regarded as raw pixel data in the form of matrices. Since one target of the project was image processing, the tests were designed so that, even though no real images were used as datasets, they behaved as if raw pixel data were being processed; they also showed whether the system could handle larger datasets.
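
The layered convolution idea can be sketched roughly as follows. The original tests were written in C and are not shown here; this is only a minimal Python sketch of the same nested-loop structure, assuming "valid" convolution (no padding), stride 1, a hypothetical 3x3 summing kernel and a synthetic 6x6 matrix standing in for raw pixel data. The function names `conv2d_valid` and `run_layers` are illustrative, not taken from the project.

```python
def conv2d_valid(image, kernel):
    """2-D 'valid' convolution (no padding, stride 1) written with plain loops.

    Like most deep learning code, this computes cross-correlation
    (the kernel is not flipped).
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = ih - kh + 1, iw - kw + 1
    out = [[0] * ow for _ in range(oh)]
    for y in range(oh):
        for x in range(ow):
            acc = 0
            for ky in range(kh):
                for kx in range(kw):
                    acc += image[y + ky][x + kx] * kernel[ky][kx]
            out[y][x] = acc
    return out


def run_layers(data, kernels):
    """Treat each kernel as one 'layer': the output of a layer feeds the next."""
    for kernel in kernels:
        data = conv2d_valid(data, kernel)
    return data


# Synthetic 6x6 "raw pixel" matrix (values 0..35) instead of a real image.
pixels = [[r * 6 + c for c in range(6)] for r in range(6)]
box = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]  # hypothetical 3x3 summing kernel

# Two layers: 6x6 -> 4x4 -> 2x2
result = run_layers(pixels, [box, box])
print(result)
```

Each additional layer shrinks the matrix by the kernel size minus one, so enlarging the input matrix is a simple way to push a system towards larger datasets.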

This was the first project in which I became familiar with chip design, so some details in this post may be incorrect. FPGA prototyping was another alien concept to me. FPGA stands for field-programmable gate array, which I initially found hard to understand, especially since my experience had only been with microcontrollers. A microcontroller runs software on a fixed processor and is suited to basic, specific tasks, whereas an FPGA's logic can be reconfigured to perform many different tasks. Think of it this way: a microcontroller is much like the training lightsaber or the standard lightsaber, which technically anyone can use, although someone who can control the Force will have the advantage. An FPGA is more like the double-bladed lightsaber used by Darth Maul, which incorporates several kyber crystals and is much more advanced.

Learning to operate an FPGA board was indeed challenging, especially since the project used a high-end device that was also very expensive. Before a chip design is manufactured as a physical chip, it is first tested using FPGA prototyping. This was very interesting to learn and perform: once the design is fully loaded onto the FPGA, which requires a countless number of software tools, you run a basic test that prints 'Hello World', and when you see it happen, the feeling of happiness is very similar to how one feels when an intense guitar solo comes on in a heavy metal song. This entire process is also a way of finding more bugs in the system. I also learned to operate the measurement system used to test the developed chips, along with the software tools that go with the routine testing procedure. For confidentiality reasons, I am unable to post any pictures or videos of the project, although the project is ending soon, so I hope that will be allowed.

Master’s Thesis – May 2019

My master’s degree was in Biomedical Imaging, in which I learned image processing and image analysis techniques within the field of Biomedicine. My thesis focused on Neuroscience, observing the behaviour of the zebrafish animal model in response to light stimuli, and its image processing and analysis techniques were focused on behavioural analysis. This project was conducted from May 2018 to May 2019, and the abstract and the thesis itself have been attached below.

Understanding Multi-Photon Microscopy 4-Pi and Theta Microscopy regarding their Lasers and Optical Set-ups

Abstract

This review focuses on two microscopic techniques, multi-photon 4-Pi microscopy and theta microscopy, on the lasers they use, and on the optical setups they rely on. Both techniques have produced better imaging results through superior optical sectioning, and for thick samples they offer the benefit of better resolution. The research studies discussed in this review cover both techniques. Multi-photon 4-Pi microscopy uses a 4-Pi microscope, which has enhanced axial resolution: the excitation light converges from two opposing objectives onto a common focal point, where it interferes constructively. Multi-photon laser sources and CCD camera systems are used in 4-Pi microscopy, and samples are analysed pixel by pixel. Theta microscopy also achieves increased resolution, by analysing the sample with two or more objective lenses, one used for detection and the other for illumination. Although the studies discussed in this review present a diverse outlook on multi-photon 4-Pi and theta microscopy, further research building on these studies is still required, so that the techniques can be perfected and the electronics and optical setups needed for better microscopic solutions can be better understood.

1. Introduction

The field of microscopy involves using different types of microscopes to analyse objects, and regions of those objects, that are not visible to the naked eye. Several techniques have been applied over the years to advance the field and to achieve better imaging processes and results. Within microscopy, this has meant altering the instrumentation and optical setups of microscopes so that they penetrate deeper into samples and produce good images of them. Microscopic processes are based on the refraction, absorption, diffraction, and reflection of light beams that encounter the sample, and on collecting the light signals that form an image. This also involves the use of different light sources, so that the light entering the sample can penetrate deeply. This review focuses on multi-photon 4-Pi microscopy and theta microscopy and their close association with other microscopic techniques, concentrating on the lasers used and their optical setups.

2. Multi-Photon Microscopy 4-Pi and Theta Microscopy

Multi-photon 4-Pi microscopy uses a 4-Pi microscope, which is a laser scanning fluorescence microscope with enhanced axial resolution. In 4-Pi microscopy, this axial resolution is achieved by converging the excitation light at a common focal point of two opposing objectives, where it interferes constructively. This constructive interference narrows the focal spot along the optical axis, improving the axial resolution to the range of 100 to 150 nanometres. Like a confocal microscope, the 4-Pi microscope analyses the sample pixel by pixel; however, 4-Pi microscopy uses multi-photon laser sources along with CCD camera systems.

A study of 4-Pi microscopy (Ivanchenko, et al., 2007) indicated that combining 4-Pi confocal microscopy with two-photon excitation provides advanced optical sectioning in the axial direction, with an axial resolution below 100 nm. The study (Ivanchenko, et al., 2007) applied 4-Pi microscopy to cellular imaging using the fluorescent protein EosFP. EosFP is a photoactivatable autofluorescent protein whose fluorescence emission shifts from 516 nm to 581 nm upon irradiation with 400 nm light.

The same study (Ivanchenko, et al., 2007) measured the two-photon excitation spectra of EosFP and suggested that a proper choice of light source wavelengths allows both two-photon excitation and photoactivation of the green form of EosFP in cellular imaging. Combining 4-Pi microscopy with the principles of multi-photon microscopy can achieve higher resolution in cellular imaging. The study also noted that, in 4-Pi confocal microscopy, photoactivatable autofluorescent proteins are advantageous for high-resolution cellular imaging because they make it possible to visualise newly synthesised proteins and to track proteins. The higher resolution of multi-photon 4-Pi microscopy therefore yields better cellular images, and an appropriate fluorescent marker together with a well-designed 4-Pi instrument further improves the imaging results.

Theta microscopy is also relevant to achieving higher resolution, because it improves the axial resolution of confocal fluorescence microscopy. The general idea of theta microscopy is to analyse a specimen with two or more objective lenses, one used for detection and another for illumination. The emitted light is detected at a specific angle theta with respect to the illumination axis. A study (Pampaloni, et al., 2013) on deep imaging of live cellular spheroids with light-sheet-based fluorescence microscopy indicated that conventional and confocal fluorescence microscopy generally use the same lens for both excitation and detection of fluorescence.

The study (Pampaloni, et al., 2013) identified that confocal theta fluorescence microscopy instead uses two or three separate lenses for detection and illumination, as mentioned above. The optical axes of these lenses are set at 90° to each other, which enhances the axial resolution by improving the point spread function (PSF), that is, the response of the imaging system to a single point source: with two objectives, the effective PSF is confined to the region where the illumination and detection volumes overlap.
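
The effect of the 90° arrangement can be illustrated with a rough calculation. This is a minimal sketch, assuming Gaussian-shaped PSFs and the common textbook model in which the effective confocal PSF is the product of the illumination and detection PSFs; the widths `LATERAL` and `AXIAL` are hypothetical round numbers chosen only to show the trend, not values from Pampaloni et al. (2013).

```python
import math


def combined_fwhm(fwhm_illumination, fwhm_detection):
    """Width (FWHM) of the product of two Gaussian profiles along one axis.

    Multiplying Gaussians of widths a and b gives a Gaussian of width
    1 / sqrt(1/a**2 + 1/b**2), so the narrower profile dominates.
    """
    return 1.0 / math.sqrt(1.0 / fwhm_illumination**2 + 1.0 / fwhm_detection**2)


# Hypothetical, illustrative PSF widths in micrometres (not measured values).
LATERAL = 0.25  # width across the optical axis of a lens
AXIAL = 0.80    # width along the optical axis (typically several times larger)

# Single-lens confocal: along z, both the illumination and the detection
# PSF are elongated, so two axial widths are multiplied.
confocal_z = combined_fwhm(AXIAL, AXIAL)

# Theta at 90 degrees: the detection axis is perpendicular to the
# illumination axis, so along z the axial illumination width meets
# the much narrower lateral detection width.
theta_z = combined_fwhm(AXIAL, LATERAL)

print(f"confocal axial FWHM ~ {confocal_z:.3f} um")
print(f"theta (90 deg) axial FWHM ~ {theta_z:.3f} um")
```

Because the product of two profiles is dominated by the narrower one, turning the detection axis by 90° lets the sharp lateral detection profile cut off the elongated axial illumination profile, which is the intuition behind the improved axial resolution.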

Combining different microscopic techniques can improve spatial resolution and produce detailed images in depth. The study mentioned above indicated that several experiments have focused on confocal theta fluorescence microscopy, stimulated emission depletion (STED) microscopy, 4-Pi confocal microscopy, and stochastic fluorescence techniques, and these have shown promising results in cellular imaging over the years. When developing instrumentation that combines two or more microscopic techniques, it is necessary to address the various challenges of high-resolution imaging.

For instance, precision issues can arise when techniques are combined, because the arrangement of the instrumentation could alter the position of lenses and light sources, which could also involve issues with surface topography. Surface topography is relevant here because it describes how a surface deviates from a flat plane. The study by (Pampaloni, et al., 2013) indicated that combining confocal theta microscopy and 4-Pi confocal microscopy has been proposed as a viable technique for enhancing resolution. However, to understand the combination of the two techniques, it is first necessary to analyse the optical setups of both methods.

3. Optical Set-ups

3.1 Optical Set-up of Multi-Photon 4-Pi Microscopy

(Ivanchenko, et al., 2007) noted in their work that previous studies have reported a similar optical setup for 4-Pi microscopy. Another study of the optical setup for 4-Pi microscopy (Peters & Koumoutsakos, 2007) focused on the use of the confocal microscope in fluorescence microscopy research. A confocal microscope improves imaging by removing out-of-focus light before it reaches the detector, and combining it with multi-photon excitation can further enhance its resolving power. However, the same study (Peters & Koumoutsakos, 2007) pointed out that optical resolution in microscopy is limited by Abbe's diffraction barrier, a challenge that is also encountered in the 4-Pi setup. Even so, 4-Pi microscopy and other techniques such as stimulated emission depletion (STED) microscopy have been able to work around the Abbe diffraction problem and improve resolution.

The basic optical setup and the components used in the 4-Pi microscope, as defined by (Peters & Koumoutsakos, 2007), are shown in the diagram below. The light beam from the coherent laser light source is divided into two pathways by a beam splitter (BS), as shown by the red pathway in the diagram. The beams are then guided by mirrors, which direct the laser light towards two objective lenses set up opposite each other. The two laser beams are superimposed at the common focal point on the sample, between the lenses in the diagram. When molecules of the sample are excited under this superposition, they emit fluorescence light. This fluorescence light is collected through both objective lenses, recombined by the same beam splitter, and then deflected by a dichroic mirror towards the detectors of the 4-Pi instrument. Each objective lens can ideally collect light over a solid angle of 2π steradians (a full hemisphere), so two opposing objectives together approach the full 4π steradians around the focal point, which gives the technique its name.
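
The solid angle behind the name can be checked with a short calculation. This sketch uses the standard cone formula Ω = 2π(1 - cos α) together with NA = n sin α; the NA 1.4 oil-immersion objective with n = 1.518 is an assumed, typical example, not the objective described by Peters & Koumoutsakos (2007).

```python
import math


def cone_solid_angle(half_angle_rad):
    """Solid angle (steradians) of a cone with the given half-angle."""
    return 2.0 * math.pi * (1.0 - math.cos(half_angle_rad))


def objective_solid_angle(na, n):
    """Collection solid angle of an objective with numerical aperture `na`
    in a medium of refractive index `n` (using NA = n * sin(half-angle))."""
    return cone_solid_angle(math.asin(na / n))


# Ideal case: a 90-degree half-angle is a full hemisphere (2*pi sr),
# so two opposing objectives would cover the full sphere (4*pi sr).
ideal_single = cone_solid_angle(math.pi / 2)
ideal_pair = 2 * ideal_single

# Assumed, illustrative real objective: NA 1.4 oil immersion, n = 1.518.
real_single = objective_solid_angle(1.4, 1.518)
real_pair = 2 * real_single

print(f"ideal pair: {ideal_pair / math.pi:.2f} pi sr")
print(f"real pair:  {real_pair / math.pi:.2f} pi sr "
      f"({100 * real_pair / (4 * math.pi):.0f}% of the full sphere)")
```

In the ideal case the two hemispheres add up to 4π; with this assumed objective the pair covers roughly three fifths of the sphere, so 4π is best read as the idealised limit that the two-objective arrangement aims for.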

3.2 Optical Set-up of Theta Microscopy

The study by (Dwyer, et al., 2007) used an 830 nm laser diode as the light source for the setup. This 830 nm source provides optical sectioning along with depth penetration. The study specified all the components used for theta microscopy, as depicted in the figure below.

A divider strip is placed near the objective lens to separate the illumination and detection pathways. Two lenses are combined in this design, the objective lens and a cylindrical lens, which allows the illumination pathway to be scanned in the x direction using mirror M2, as shown in the figure above. The objective lens collects the light backscattered from the object plane, and this light is then descanned by mirror M3, as shown in figure 2. The study (Dwyer, et al., 2007) indicated that a galvanometric scanner was used, which helps eliminate fluctuations in the setup and keeps the detection and illumination pathways synchronised. The light is then collimated, focused by lens L4 in figure 2, and transmitted through slit S1. The study used a CMOS line detector to detect the light. Together, the cylindrical lens and the objective lens produce orthogonal lines through the objective lens; the primary line is formed at the focus of the objective lens and is used to image the sample.

4. Lasers in Optics and Microscopy

4.1 Use of Lasers within Multi-Photon Microscopy 4-Pi

A study of microscopy and lasers (Kuschel & Borlinghaus, 2014) identified that multiphoton microscopy relies on a nonlinear excitation process, in which a molecule absorbs the energy of several photons at once, and that this process is only efficient at high intensities. Because excitation depends on the concentration of absorbed photons, a high photon density is needed. Pulsing the laser increases the photon density: the energy is concentrated into very short pulses separated by comparatively long dark intervals, so the intensity within each pulse is far higher than the average intensity. To obtain the 2-photon effect, short-pulse lasers are used, and the titanium-sapphire laser is suitable because it delivers adequate output power for the 2-photon effect with pulse lengths of around a hundred femtoseconds. Fibre lasers are also used in multi-photon 4-Pi microscopy, because their stable beam profile helps them reach high output power. Fibre lasers also require little to no maintenance.
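
Why pulsing helps can be shown with a simple estimate. This is a minimal sketch, assuming idealised rectangular pulses: since two-photon excitation scales with intensity squared, concentrating the same average power into a fraction D = f·τ of the time raises the time-averaged two-photon signal by 1/D. Real pulse shapes change the result by a factor of order one. The 100 fs pulse width and 80 MHz repetition rate are assumed typical Ti:sapphire values, not figures from Kuschel & Borlinghaus (2014), and `two_photon_gain` is a hypothetical helper name.

```python
def two_photon_gain(pulse_width_s, rep_rate_hz):
    """Gain in time-averaged two-photon signal of a pulsed laser over a
    continuous-wave laser with the same average power.

    For rectangular pulses the laser is 'on' a fraction D = f * tau of the
    time with peak intensity <I>/D, so <I^2> = D * (<I>/D)**2 = <I>**2 / D,
    i.e. a gain of 1/D.
    """
    duty_cycle = pulse_width_s * rep_rate_hz
    if not 0 < duty_cycle <= 1:
        raise ValueError("pulse width and repetition rate give an invalid duty cycle")
    return 1.0 / duty_cycle


# Assumed, typical Ti:sapphire values: ~100 fs pulses at ~80 MHz.
gain = two_photon_gain(100e-15, 80e6)
print(f"duty cycle = {100e-15 * 80e6:.1e}, two-photon gain ~ {gain:.2e}")
```

A continuous-wave laser corresponds to a duty cycle of 1 and therefore a gain of 1, which is why femtosecond pulsed sources make two-photon excitation practical at average powers that the sample can tolerate.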

4.2 Use of Lasers within Theta Microscopy

Laser technology is a diverse field with many different innovations. In the early days of confocal microscopy, in the mid-1980s, HeNe lasers were used as light sources (Kuschel & Borlinghaus, 2014), because they were compatible with samples and specimens and easy to handle. However, the standard HeNe laser emits at 633 nm, which is far from the excitation range of most fluorochromes. Another type of HeNe laser, emitting at 543 nm, was a good source for fluorochromes in the mid-range. Even so, these lasers are rather dim, emitting only about 0.5 mW. The argon-ion gas laser gave good results at 488 nm and 514 nm and was particularly successful with the fluorophore fluorescein. Argon lasers are still used in microscopy today because they provide five distinct excitation lines in the blue to blue-green range of the visible spectrum.

Although gas lasers are still used in microscopy, solid-state lasers and diode lasers have shown better stability and produce little heat, so they generally do not need a cooling system. As described above, pulsed lasers are mostly used in this context, and they can be restricted to emitting a single wavelength. When several parameters have to be handled, for instance in multi-colour fluorescence experiments, lasers can be combined. For UV dyes, shorter-wavelength excitation has been provided by 405 nm diode lasers. Ar-UV lasers at 351 nm and 364 nm have also been used, specifically for measuring calcium in samples (Kuschel & Borlinghaus, 2014).

When it comes to analysing different samples, observing them live is always a major priority. However, when imaging deep inside a living sample, light is scattered because the densely packed cellular structures give the sample a complex, inhomogeneous refractive index. Longer wavelengths are scattered less, which gives better images (Kuschel & Borlinghaus, 2014). To achieve deep penetration, red and infrared laser light sources and fluorescent labels are therefore used in microscopy.

5. Conclusion

This post has focused on multi-photon 4-Pi microscopy and theta microscopy and their instrumentation. These techniques provide superior optical sectioning at greater depths within thick specimens, as well as increased resolution, and they have also decreased phototoxicity. Although the studies mentioned in this review present a diverse outlook on these techniques, new studies still need to be carried out that build on the previous ones. This will help to perfect the two techniques and to understand the electronics and optical setups needed for better microscopic solutions.

6. References

- Dwyer, P., D. C. & Rajadhyaksha, M., 2007. Confocal theta line-scanning microscope for imaging human tissues. Applied Optics, 46(10), pp. 1843-1851.

- Ivanchenko, S. et al., 2007. Two-Photon Excitation and Photoconversion of EosFP in Dual-Color 4Pi Confocal Microscopy. Biophysical Journal, 92(12), pp. 4451-4457.

- Kuschel, L. & Borlinghaus, R., 2014. Advanced microscopy/supercontinuum white light: Multiple microscopy modes in a single sweep with supercontinuum white light. Bio Optics World.

- Pampaloni, F., Ansari, N. & Stelzer, E., 2013. High-resolution deep imaging of live cellular spheroids with light-sheet-based fluorescence microscopy. Cell and Tissue Research, 352(1), pp. 161-177.

- Peters, R. & Koumoutsakos, P., 2007. Fluorescence Microscopy, s.l.: University of Illinois at Urbana-Champaign.

Ethical Issues within Medical Data Transfer and Biobanking

- Introduction

Biobanking has become an important process in drug development for collecting and storing biological samples that are used in research studies. Biobanks cover a diverse range of sample repositories that are associated with different infrastructure networks worldwide. In drug development, collecting biological and clinical data through biobanking has become essential, and data collected from human subjects must also respect the legal rights of those volunteers. Drug development aims to bring new pharmaceutical drugs to market with precision, which is why it is important to understand the relationship between biobanking and drug discovery, and the ethical issues that arise in this context. In this post, I will discuss how biobanking works closely with drug development approaches and describe the ethical issues associated with using biobanks in drug discovery.

- Biobanking and Drug Development

Biobanks support progress in personalized medicine, an approach that seeks medical treatments tailored to each patient and their individual characteristics. Researchers need to understand the molecular and genetic profiles of patients, which can make them susceptible to specific diseases. Samples are collected based on the population, the disease type, and whether the disease occurs across a wide range of people. This is why biobanks are divided into different categories: research, clinical studies, diagnostics, and pharmaceuticals. In my current research, I have observed that a person's biological variations, lifestyle, and societal factors are considered first when dealing with blood samples. The main aim of my project is to analyze problems at the cellular level to understand bone diseases, so when we receive blood samples from patients, it is important to know factors such as age and gender. This information further helps in studying the complexities of the disease.

In drug development, there is always a risk of toxic reactions, for instance whether certain chemicals could trigger allergic reactions in a patient. This is why the preclinical and clinical phases of drug development are crucial in producing safe medicine. In my research group, we are first designing drug-testing protocols at the cellular level. As the experiment progresses, we will move towards animal studies in order to arrive at safe drug products. However, the initial samples come from human subjects, which raises important ethical questions, such as sample ownership and the sustainability of biobanks.

- Ethical Issues with Biobanking in the Drug Development Process

Biobanks and health databases have high potential; at the same time, access to sensitive data poses risks to human rights. During a researcher's training, it is necessary to understand the documentation provided by health organizations for dealing with human biological data. It is equally important for volunteers to be aware of the regulations that apply to the collection of their data. One specific document that I went through is the Declaration of Taipei, which aims to balance the rights of people providing their data with privacy regulations. The declaration also supports the view that health data are an essential tool for enhancing scientific knowledge.

Biological materials are obtained as samples from living or deceased people. These samples carry biological details about the subject being analyzed. Samples are collected from whole populations as well as from individuals, which raises concerns about privacy, discrimination, and confidentiality. This is why ethical considerations should be communicated in an organized way to the researchers and individuals involved in research studies. Such practices can be applied at the education level in all disciplines. This will help to balance the advancement of science with the protection of the individual rights associated with the biological data received from people. Such guidelines give ethical direction on the problems that arise when handling biobanks.

- Ownership of Biological Data in Drug Development

I attended a workshop on drug development, and one major topic discussed was the use and misuse of biological data and biobanks. The political, administrative, and commercial use of health data poses risks in this field. The guidelines should not be limited to research; otherwise, researchers will remain unaware of the risks associated with commercialization and cost-cutting in the medical field. Human data samples are collected by research biobanks either for their own research studies or for the research of third parties. Ownership of the samples remains contentious to this day, mainly because it determines how ethical and legal issues should be considered in biobanking and drug development together. In addition, consideration should be given to the human subjects, researchers, and organizations involved. However, internationally recognized human rights hold that one individual cannot own another, as this would amount to a form of slavery. To avoid such conflicts, many biobanks describe themselves as custodians rather than owners of the samples. A similar practice is recommended when the samples are passed on to researchers, although such practices differ from organization to organization.

During my bioethics course, a case study on the International Agency for Research on Cancer (IARC) indicated that there is no ownership when it comes to biological samples. It further mentioned that the biobank involved has the right to assign the associated parties either ownership or custodianship. Although this responsibility lies with the biobank, it still has to follow institutional and national guidelines before assigning either role. This arrangement covers the human volunteers and the biobank, but it leaves out the researchers, who are also part of the biobanking process. Scientists who help collect the biological data may equally feel entitled to consider that data "theirs". This raises the possibility that principal investigators could ask for access to certain databases or insist on co-authorship when publishing. Scientific competition could also raise questions about control over the samples, which leaves this as an ongoing debate.

- Conclusion

In the field of drug development, it is important to understand the role of biobanking in research processes and to develop the relevant skills at the education level. Workshops, training, and courses should be organized frequently, so that it becomes easier to understand the current ethical and legal issues related to handling human sample databases.


Ethical and Social Issues in Engineering and Technology Practices

- Introduction

In this day and age, the engineering profession is advancing rapidly. The ethical and social issues associated with this profession are becoming an important part of technology and engineering degree programs. My academic and research work combines engineering, technology, and biomedicine. In this post, I will focus on engineering and technological aspects, and in another post, I will specifically address issues related to the medical field.

- Engineering and Technology Practices

Engineering and technology practices are introduced at early stages of degree programs. In most cases, such practices are first developed from a theoretical perspective through case studies and brainstorming with students. From my own educational experience, during the 5th semester of my bachelor studies, we were mostly given technical problems and tasks involving the design of new technologies. We were expected not only to provide solutions but also to discuss whether such tasks arise in fields such as manufacturing, research and development, and sales.

One specific project involved designing an electrical muscle stimulation technique that could be used for diagnostic or therapeutic purposes. A major challenge in designing such a technique lies in the circuit design and implementation. This is why we are trained through courses and internships to practise our software and electronics skills. Initially, we are taught circuit design and troubleshooting methods, so that when we are working practically on a circuit and something goes wrong, we are able to identify the problem and rectify it.

- Approaches for addressing Social Issues in Engineering and Technology Disciplines

During our internships, we are given an overview of how ethical and social approaches are applied in a professional setting. Technology has become a huge part of every discipline, which is why training engineers and technologists with proper guidelines is crucial. This foundation is first provided through internships and training opportunities. Most of the internships and training programs that I did during my bachelor studies were in healthcare settings and research-based projects at universities. In healthcare settings, I worked mostly in Biomedical Engineering departments, where engineers and technicians dealt with healthcare equipment.

One social aspect was teaching all employees how to handle and troubleshoot machinery. This involved the use of gloves, cleanliness, and the disinfectant solutions recommended for handling electronic machinery. Another social aspect was taking responsibility for a given task, and we were trained in how to carry such tasks out. When we had supervision, we would discuss the problems of a specific task and their possible solutions. While working in a team, we were expected to contribute our own ideas and solutions and to work together towards a joint conclusion. When conflicts arose, we were taught to treat them as constructive feedback rather than as arguments. When we were expected to work without supervision, we were advised that we could always ask for help if we needed other ideas.

During internships and training, there is the opportunity to learn how to become independent in your skills, but in engineering and technological practice on heavy machinery, it is always a good idea to ask for and receive supervision every now and then. Since these machines were operated in a healthcare environment, one important social aspect was observing the social impact of the engineering practices being applied. This mainly included risk and hazard assessment involving engineers, technologists, patients, and healthcare staff.

- Ethical Approaches for Engineering and Technology Practices

Healthcare professionals operate medical equipment with patients, which is why the technical staff must give them a proper description and overview of new equipment, troubleshooting procedures, and so on. This social interaction was also taught by introducing us interns to other staff members during our training. Besides understanding this as a social issue, it is also essential to use ethical approaches based on virtues. Moral qualities were also taught, such as honesty and respect towards patients and staff from every department. Ethical approaches in line with the ethical codes of professional engineering organizations should be considered when making decisions in projects. During my final year of bachelor studies, we were assigned group projects and expected to follow proper ethical guidelines.

One major ethical approach taught to us was decision-making based on consequences. In our Ethics course, it was mentioned that decisions within a group should aim to satisfy all of its members. This is why, before starting our group project, we held a meeting with our study advisors in which the main points were discussed. Firstly, we had to decide on the engineering project assigned to our group, which would be the main aim of our study. Secondly, we addressed a proper description of the technology to be developed in the group project. Thirdly, we kept in mind the social aspects associated with the project. Lastly, the expected effects were to be analyzed as the project progressed. We worked on developing medical devices, which is why understanding the social interaction between healthcare staff and engineers was important.

The above-mentioned points provide a foundation for dealing with ethical issues within engineering and technology practices. They emphasize shared decision-making when people work in a team, and ensuring that each member receives an equal share of opportunities. When it comes to choosing the specific technology that becomes the team's focus, all members should be involved in the decision. This provides a platform for improving our skills and helps us keep an open mind about different ideas.

- Conclusion

The first essay addressed the importance of developing approaches that help solve the ethical and social issues observed in engineering and technology disciplines. It is essential to focus on such practices at the education level, so that it becomes easier to develop and apply these skills once a person enters a work environment. Case studies at the classroom level also give students good insight into, and a better understanding of, ethical and social concerns.


Zebrafish – Tiny yet magnificent creatures!

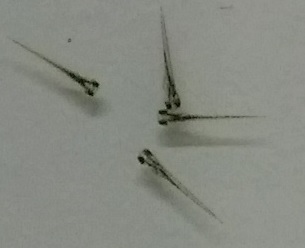

As an animal model, zebrafish has been very effective in research studies. Besides its similarity to human physiology, its advantages include its fertility, availability and reproducibility. A single adult female zebrafish can lay anywhere from 50 to more than 100 embryos at a time, which provides a larger sample size for experimentation. However, if mating is disturbed by stressful stimuli, it can be unsuccessful and no embryos are produced, so it is essential to provide a calm environment when handling zebrafish. An adult zebrafish is about 4–5 cm long, but what is more interesting is the response of zebrafish embryos in behavioural and drug screening studies. The age of zebrafish embryos is counted as the number of days after fertilization. This means that the day on which an adult female lays the embryos is regarded as 0 days post fertilization (dpf).

Animal licensing regulations apply from around the time these embryos develop their swim bladders, which is usually around 5 dpf. Before 5 dpf, any researcher with proper instruction and practice can handle zebrafish without a license. However, researchers without a zebrafish license are advised to work with them only between 0 and 4 dpf. Because swim bladder development usually occurs between 4 and 5 dpf, it is better to plan experiments accordingly and to sacrifice the embryos either at the end of 4 dpf or early on 5 dpf. Most of the studies that I have conducted were planned this way.
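The counting convention above (lay day = 0 dpf, licensing from about 5 dpf) can be expressed as a small date calculation. This is a minimal sketch, assuming the 5 dpf cut-off exactly as described in this post; real licensing thresholds depend on local regulations, and the function names are hypothetical, not taken from any lab software.

```python
from datetime import date, timedelta

# Assumption: the ~5 dpf licensing threshold described in this post.
# Check your own institutional and national regulations.
LICENSE_THRESHOLD_DPF = 5


def days_post_fertilization(lay_date: date, on_date: date) -> int:
    """Age in dpf: the day the embryos are laid counts as 0 dpf."""
    if on_date < lay_date:
        raise ValueError("on_date is before the lay date")
    return (on_date - lay_date).days


def below_license_threshold(lay_date: date, on_date: date) -> bool:
    """True while the larvae are 0-4 dpf, i.e. before the 5 dpf cut-off."""
    return days_post_fertilization(lay_date, on_date) < LICENSE_THRESHOLD_DPF


if __name__ == "__main__":
    laid = date(2019, 3, 4)  # hypothetical lay date
    for offset in range(7):
        day = laid + timedelta(days=offset)
        print(day, days_post_fertilization(laid, day), below_license_threshold(laid, day))
```

Running it prints one line per day, with the flag switching from True to False on the day the larvae reach 5 dpf, which is where an experiment planned this way would need to end.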

Usually, when the embryos reach 1 and 2 dpf, they develop into their larval form, which is why the terms zebrafish embryos and larval zebrafish are used interchangeably for samples under 5 dpf. More than 50 larvae can be placed in a single laboratory petri dish, as shown in Figure 1. They are kept in E3 medium, which is specifically prepared for zebrafish embryonic development.

Figure 1. – Zebrafish Embryos in a Laboratory Petri Dish – These are 4 days post fertilization (dpf) old. Their body length is approximately 3.7mm.

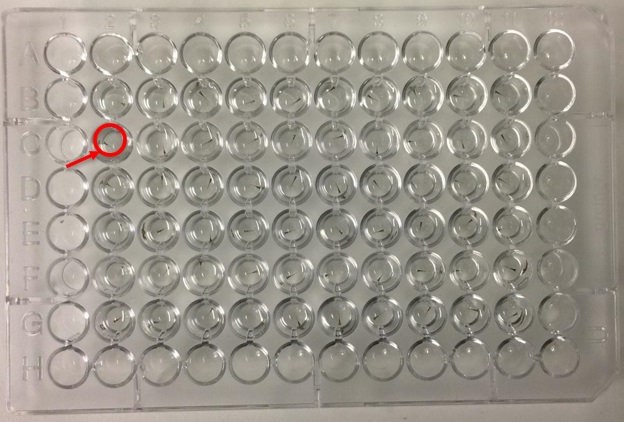

Because of their large numbers and small body length, they are easy to transfer to well-plates (a 96-well-plate with zebrafish is shown in Figure 2). For handling such small zebrafish, pipetting is recommended, with the end of the pipette tip simply cut off. This widens the opening so that the larva can be drawn into the pipette; a regular, uncut tip damages the zebrafish body and kills it immediately.

Figure 2. – Zebrafish Embryos in a 96 Round Bottom Well-plate – These are also 4 dpf old and were transferred from a petri dish into the well-plate using pipetting techniques. Notice that the wells on the edges have no embryos in them. This is because the medium evaporates faster from the edge wells; leaving them empty avoids this so-called edge effect.
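Leaving the edge wells empty, as in Figure 2, reduces the usable wells on a 96-well-plate. The sketch below lists the inner wells, assuming a standard 8 × 12 layout (rows A to H, columns 1 to 12); the function names are hypothetical and only illustrate the plate-planning step.

```python
import string

ROWS = string.ascii_uppercase[:8]  # rows A to H of a standard 96-well plate
COLS = range(1, 13)                # columns 1 to 12


def is_edge_well(row: str, col: int) -> bool:
    """True for wells in the outer ring (first/last row or column)."""
    return row in (ROWS[0], ROWS[-1]) or col in (COLS[0], COLS[-1])


def inner_wells() -> list[str]:
    """Well labels that can receive larvae when edge wells are left empty."""
    return [f"{r}{c}" for r in ROWS for c in COLS if not is_edge_well(r, c)]


if __name__ == "__main__":
    wells = inner_wells()
    print(len(wells))  # 60: rows B to G times columns 2 to 11
    print(wells[0], wells[-1])
```

With the outer ring excluded, 60 of the 96 wells remain (6 rows × 10 columns), which sets how many larvae one plate can hold for a single experiment.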

Zebrafish are very responsive to different types of stimuli, even when a researcher simply picks up the petri dish and places it on another surface. They start to move very rapidly when they encounter stimuli; even a slight tap on the lid of the dish makes them move. The following gif files show the movement of the embryos. Sometimes the E3 medium is coloured so that zebrafish without pigmentation can be observed: such embryos appear transparent, and a coloured medium helps in locating them.

It is very interesting to observe the body of a 3.7 mm long zebrafish closely.

Figure 5. – Zebrafish Embryos 4dpf

In future posts, I will discuss their behavioural responses, such as the startle response to different types of stimuli, as well as the imaging techniques used for behavioural analysis and drug screening.


Risks within Biomedical Imaging

About two years ago, the following question was posed in one of the lectures of a course named Bioethics.

What kind of risks are involved in your own field of research? Is there a risk evaluation or risk governance plan? Try to evaluate the management and ethical acceptability of such risks.

My master’s program was in Biomedical Imaging, so I selected this as my field of research. It is a two-year program in Turku, Finland, and I graduated in July 2019.

- Introduction

This post looks at the field of biomedical imaging with a focus on the risks associated with the discipline itself. Biomedical imaging refers to the area in which medical techniques are applied to produce visual images of the human body, or of specific organs and tissues within it, as well as of animal models for research purposes. Regarding risks in biomedical imaging, the impact of radiation exposure on public health has been a major concern, which is why the public tends to avoid imaging examinations in some cases, as indicated by Ulsh (2015). This post further discusses risk management and the ethical acceptability of the associated risks within biomedical imaging.

- The Field of Biomedical Imaging

As mentioned above, biomedical imaging involves generating visual images of the human body, which can be used for clinical and medical purposes. The field also includes analysing and highlighting the physiological functions of the human body, as well as diagnosing and treating different diseases. It has provided a solid platform for understanding anatomy and physiology. Biomedical imaging involves the examination, enhancement and viewing of images generated through techniques such as x-ray, computed tomography (CT), magnetic resonance imaging (MRI), ultrasound, and optical imaging. The images are constructed using different modelling principles before being displayed.

- Risks in terms of Biomedical Imaging

The study mentioned above (Ulsh, 2015) has identified the presence of carcinogenic risks in biomedical imaging. Worldwide, public radiation exposure from medical imaging has received considerable attention, largely because of the growing use of computed tomography, as indicated by Ulsh (2015). Medical societies have repeatedly recommended against multiplying the small doses received by large populations in order to estimate the number of radiation-induced cancers. This is because such calculations can lead to alarming health claims that do not properly account for the uncertainties involved. Even so, the same study (Ulsh, 2015) notes that several research studies have published predictions of future cancers from CT scanning, which has contributed to increasing concern among patients and the public in general.


- Commonly encountered Risks in Biomedical Imaging

From the scenario described above, the commonly encountered risks in biomedical imaging can be identified. Besides the issue of dosage, misdiagnosis is a risk in this context. It can result from a poor-quality medical image, or even from giving the patient an inappropriate isotope dose. Poor or malfunctioning equipment could damage the body part being examined. If a professional ignores these risks for any reason, it could cause problems for the patient and even for clinical research. Poor documentation of patient data, or failing to report the details properly, could also create problems. Malpractice in this respect likewise puts the patient at risk.

- Risk Evaluation and Assessment within Biomedical Imaging

The study (Ulsh, 2015) reviewed several radio-epidemiological studies, noting that such studies begin with a null hypothesis of no relationship between exposure to ionizing radiation and cancer risk. This illustrates how risk evaluation is carried out within biomedical imaging research. It was further stated that if sufficient evidence is found to reject this null hypothesis, the alternative hypothesis, that radiation exposure increases cancer risk depending on the dose used, is accepted. However, if a specific study fails to detect a significant change in cancer risk in relation to dose, it has failed to support the hypothesis that radiation increases cancer risk. This form of risk evaluation, however, applies to research studies in this area. It is equally important to focus on the risk evaluation carried out by medical authorities within the field of biomedical imaging.

Another related study (Hricak, et al., 2011), focusing on risk management in medical imaging, indicated the risk factors associated with biomedical imaging. The study outlined the general procedure for identifying and evaluating such risks that is applied worldwide. The first step is to identify the hazard associated with the radiological procedure. The next step is to determine who could be harmed by the imaging procedure, i.e. the staff involved and the patients. Understandably, the employees and patients involved are the first priority when it comes to potential harm from imaging techniques. This step also takes into account conditions such as pregnancy, sensitivity to certain materials, and age-related problems, which differ from individual to individual.

The harmful conditions that could occur should then be analysed and predicted. This matters because when adverse outcomes arise, whether from clinical research or simply from examining patients, the professionals involved may face lawsuits. Once a specific hazard has been identified, medical practices should be improved to protect people against it. However, such changes should follow reasonable procedures, which means that protection standards should also be established in institutions where this kind of risk evaluation might not yet be applied (Hricak, et al., 2011).

A study on ethical considerations in biomedical imaging (Mijaljica, 2014) highlighted that, for risk assessment, policies and procedures for imaging safety should be well defined. Accurate documentation is essential, as are good communication and customer service. As mentioned above regarding radiation dosage, it is important to have controls on radiation exposure.

- Ethical Acceptability of Risks in Biomedical Imaging

The study by Mijaljica (2014) found that ethical acceptability varies from country to country. This also applies to bioethics as a course taught in schools worldwide, since students in biomedical imaging sometimes take the subject lightly and may overlook its importance. For instance, medical students might not consider bioethics as important as their other medical courses. This can shape the future behaviour of medical professionals, and such attitudes at the educational level could create problems later on.

Negligence can be identified as a major risk, because the professional conducting an examination with medical imaging techniques must be aware of the associated risks. Biomedical imaging techniques rely on a variety of sensors and computational methods to produce the images. These can be used to monitor patients when diagnosing and treating diseases (Webb, 2017). However, risks arise in such settings; for instance, a patient might have an implant, which is why instruments must be designed, and methods chosen, appropriately for each imaging technique.

- Conclusion

This post has shown that most risks within biomedical imaging stem from malpractice and the neglect of certain factors. It has further examined the extent to which medical and scientific authorities are involved in this matter and how they respond to health risks. Risk assessment and evaluation, along with the ethical acceptability of these risks, have also been discussed.


Review article on ‘Stem Cells and Cardiac Repair’

This is a review of the paper ‘Stem cells and cardiac repair: alternative and multifactorial approaches’, published in the Journal of Regenerative Medicine & Tissue Engineering.

Severe myocardial infarction (MI) can impair the functioning of the heart through ventricular remodeling and progressive contractile dysfunction. Pharmacological and surgical interventions can only make the symptoms less severe; they fail when it comes to regenerating dead myocardium. Stem cells have the ability to replace or repair dead or injured cells after myocardial infarction. They can either integrate into the tissue, or transmit molecular signals to a targeted tissue without actually entering it themselves. Clinical studies suggest that this may improve overall heart function. Another approach, endogenous cell homing, offers an alternative to stem cell transplantation. Identifying the factors involved and properly regulating the signaling between the bone marrow, the circulation and the infarcted myocardium are extremely important for synchronizing the mobilization, incorporation, survival, differentiation, and proliferation of stem cells. It is hoped that such experimental trials will lead to successful treatment strategies in the clinical field.

There are different subtypes of stem cells, such as embryonic stem cells and somatic stem cells, and both can differentiate into cardiomyocytes. Bone marrow mononuclear cells (BM-MNCs) were found to preserve the contractile potential of adult cardiomyocytes. In patients with MI, BM-MNCs infused directly into the infarct-related artery through an angioplasty balloon catheter significantly improved the left ventricular ejection fraction after 6 months. However, the number and proliferation of stem cells were found to decrease in elderly patients, which was one reason for the poor outcomes of clinical trials in older patients, although BM-MNC therapy has been shown to be more effective in ageing and diabetic individuals.

To conduct such experiments in animals, an MI must first be induced, so that the animal is in an ischemic condition for testing. When mesenchymal stem cells (MSCs) were injected directly into ischemic rats, fibrosis, apoptosis and left ventricular dilatation decreased, which relieved systolic and diastolic cardiac dysfunction, although there was no significant effect on myocardial regeneration.

Progenitor cells have shown good results when they are used to treat hibernating cardiomyocytes.

Cardiac stem cells (CSCs) have the potential to proliferate and differentiate into cardiomyocytes and, to a lesser extent, into smooth muscle cells and endothelial cells. For stem cell therapy, these cells need to be harvested, cultured and delivered to the infarcted heart. In a previous study, injecting CSCs into infarcted myocardium led to an increase in the activity of the enzyme Akt, a decrease in caspase 3 activity and apoptosis, and an increase in capillary density.

Adult stem cells can be harvested, expanded in vitro and injected locally into the infarcted recipient. However, in vitro expansion of stem cells and their in vivo delivery are restricted by the limited availability of stem cell sources, the excessive cost of commercialization, and the anticipated difficulties of clinical translation and regulatory approval.

With endogenous cell homing, the difficulties faced by stem cell transplantation can be bypassed. Homing usually refers to the ability of a stem cell to find its way to a particular anatomical destination, sometimes over great distances, from the bone marrow through the bloodstream. In one case, a male patient received a heart from a female donor. Cardiomyocytes containing the Y chromosome, which could only have come from the male recipient, had integrated into the donor myocardium, made up 7 to 10% of its cardiomyocytes, and proliferated. Hence, circulating bone marrow-derived cells can be recruited to the injured heart and differentiate into cardiomyocytes.

Homing is a multistep cascade: initial adhesion to the activated endothelium or exposed matrix through selectins (cell adhesion molecules), migration through the endothelium and, finally, migration into and invasion of the target tissue.

Several factors regulate the signaling between the bone marrow, the circulation and the infarcted myocardium, and identifying them is key to synchronizing stem cell mobilization, incorporation, survival, differentiation and proliferation. Some of these factors are summarized below.

Granulocyte colony stimulating factor (G-CSF) is currently the most widely used agent in clinics for stem cell mobilization; it affects the mobilization of stem cells as well as the release of further cytokines and growth factors.

Stem cell factor (SCF): elevated levels in the circulation enhance the mobilization, incorporation, proliferation, differentiation and survival of bone marrow stem cells (BMSCs) in the infarcted heart, which are essential steps in stem cell-mediated repair.

Fibroblast growth factor (FGF) is thought to maintain MSC self-renewal.

Hepatocyte growth factor (HGF) contributes to stem cell-mediated repair through its potential to create an adhesive microenvironment in the heart once stem cells have been recruited there.

Tumor necrosis factor alpha (TNF-α) can be valuable in stem cell-mediated cardiac repair if it is properly timed and localized, but it can be harmful otherwise.

Interleukin 8 (IL-8) has an inflammatory effect, but it can also induce stem cell mobilization.

Recent research has shown that many drugs, peptides, proteins, and other factors can increase the number and functional activity of stem cells. Combining pharmacological and stem cell therapy, or administering combined drug therapy, can improve the mobilization, incorporation, differentiation and proliferation of stem cells.

Various studies were conducted in mice and rats with induced MI. In these studies, different factors, proteins and other agents were injected to see whether cardiomyocyte function improved.

Statins decreased cardiomyocyte hypertrophy and interstitial fibrosis when tested in mice with MI. ACE inhibitors were also given to MI mice and enhanced bone marrow stem cell migration. These were the pharmacological approaches. In the gene-modification approach, genetically modified MSCs were introduced into MI rats, which showed increased anti-apoptotic ability, cardioprotection, survival rate and angiogenesis.

Next, MSCs preconditioned with hypoxia were introduced into MI mice, which showed increased survival and enhanced paracrine mechanisms. MSCs combined with growth factors were then introduced into MI rats, which increased their apoptotic ability. Finally, MSCs treated with diazoxide were introduced into female MI rats, which increased their protection.


Speak for your lips are yet free, Speak for the truth is still alive. (Faiz Ahmad Faiz)

The name Faiz is a rebellious wave that sweeps cruelty far away. Many circumstances led Faiz Ahmed Faiz to compose rebellious poetry. But before turning to his poetry, I would like to discuss those circumstances.

Faiz accepted his first job offer, as a lecturer of English at Mohammadan Anglo Oriental College, Amritsar, in 1935. At that time, the entire world, including the subcontinent, was reeling from the Great Depression, which began with the Wall Street crash of 1929 in America and spread rapidly around the world, leaving many lands stalked by unemployment, poverty and fear. Faiz later wrote about how he accepted whatever jobs came his way in those difficult times. He taught at MAO College from 1935 to 1940. During this period, he came across some remarkable intellectuals and became friends with them. Some of them had authored a collection of short stories titled ‘Angare’, which had received instant notoriety because it introduced a very different way of writing.

At the time, the book was an extremely fierce attack on society. It became a centre of attention, and from it the idea of the All-India Progressive Writers’ Association was born, which later developed into a literary movement. That movement gave rise to some of the most tremendous talents of the 20th century in the Urdu language. A rebellion began the very instant Angare was published; this was the first step towards the rebellion that Faiz would carry forward. The book Angare was banned by the British Government.

In the years leading up to 1947, Faiz and most intellectuals were more concerned with freedom from colonial rule than with the partition. Faiz once said that everybody had a vague idea of wanting independence from the British Government.

Faiz was by then the editor of ‘The Pakistan Times’. The sentiments expressed in his poetry also appeared in his paper. He wrote many essays and editorials filled with grief over the terrible killings taking place in those days.

The message Faiz conveyed was certainly one of attaining freedom, not only physically but mentally too. He wanted free minds, minds that could easily tell good from bad. He wanted to instil in people the idea that it is necessary to stand up against what is wrong. If our blood does not rage at the sight of such cruelty and injustice, then we are not a living nation. What is the flush of youth worth if it is not of service to the motherland? An honest man has nothing to fear.

Speak; a little time suffices,

Before the tongue, the body die
